After today’s OpenAI DevDay, I tried the new Assistants API, and the results are impressive. I am particularly interested in how it works with its retrieval tool, so I decided to test how well it generates articles from a user’s custom documents.

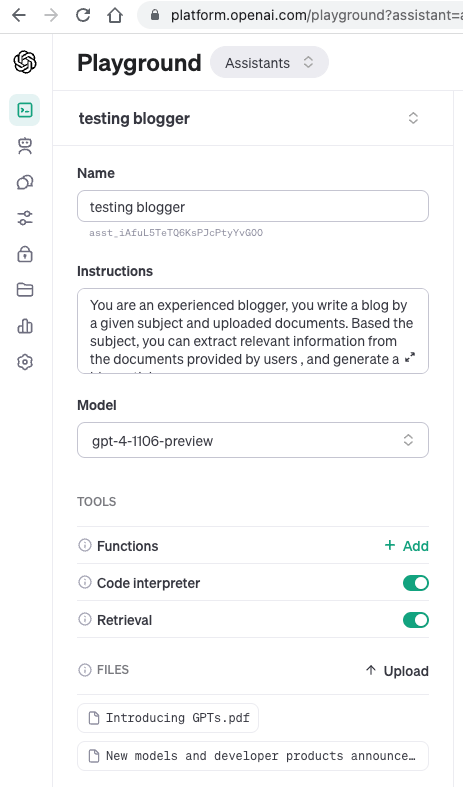

I went to OpenAI’s playground, and it already supports assistants. Nice.

On the left side of the playground page are the configuration settings. I entered “testing blogger” as the name and gave the following prompt as the instructions:

You are an experienced blogger, you write a blog by a given subject and uploaded documents. Based the subject, you can extract relevant information from the documents provided by users , and generate a blog article.

I chose the new gpt-4-1106-preview as the model and enabled both the Code Interpreter and Retrieval tools.

Next, I went to OpenAI’s blog and saved the following two pages as local PDF files:

https://openai.com/blog/introducing-gpts

https://openai.com/blog/new-models-and-developer-products-announced-at-devday

Then I uploaded the two PDF files to the playground by clicking “Upload” in the configuration section.

Now it looks like all the configuration is done. Not too much work.
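For readers who prefer code to the playground, here is a minimal sketch of the same setup using the `openai` Python SDK. It reflects the beta Assistants API as I understand it at launch (`files.create` with `purpose="assistants"`, and `beta.assistants.create` with a `retrieval` tool and `file_ids`); parameter names may differ in other SDK versions, so check the current reference. The helper names and the PDF file names in the usage comment are my own placeholders, not anything from the playground.

```python
from pathlib import Path

# The instructions prompt, copied verbatim from the playground setup above.
INSTRUCTIONS = (
    "You are an experienced blogger, you write a blog by a given subject and "
    "uploaded documents. Based the subject, you can extract relevant information "
    "from the documents provided by users , and generate a blog article."
)


def build_assistant_config(file_ids):
    """Return keyword arguments that mirror the playground settings."""
    return {
        "name": "testing blogger",
        "instructions": INSTRUCTIONS,
        "model": "gpt-4-1106-preview",
        # Both tools were enabled in the playground.
        "tools": [{"type": "code_interpreter"}, {"type": "retrieval"}],
        "file_ids": list(file_ids),
    }


def upload_pdfs(client, pdf_paths):
    """Upload each local PDF for use by assistants and collect the file IDs."""
    file_ids = []
    for path in pdf_paths:
        with Path(path).open("rb") as fh:
            uploaded = client.files.create(file=fh, purpose="assistants")
        file_ids.append(uploaded.id)
    return file_ids


def create_blogger_assistant(client, pdf_paths):
    """Upload the documents, then create the assistant that can read them."""
    file_ids = upload_pdfs(client, pdf_paths)
    return client.beta.assistants.create(**build_assistant_config(file_ids))


# Usage (assumes `pip install openai` and an OPENAI_API_KEY environment variable;
# the two file names are hypothetical):
#   from openai import OpenAI
#   assistant = create_blogger_assistant(
#       OpenAI(), ["introducing-gpts.pdf", "devday-announcements.pdf"]
#   )
#   print(assistant.id)
```

Keeping the configuration in a plain dictionary makes it easy to inspect or tweak the settings before any API call is made.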

Let’s roll!

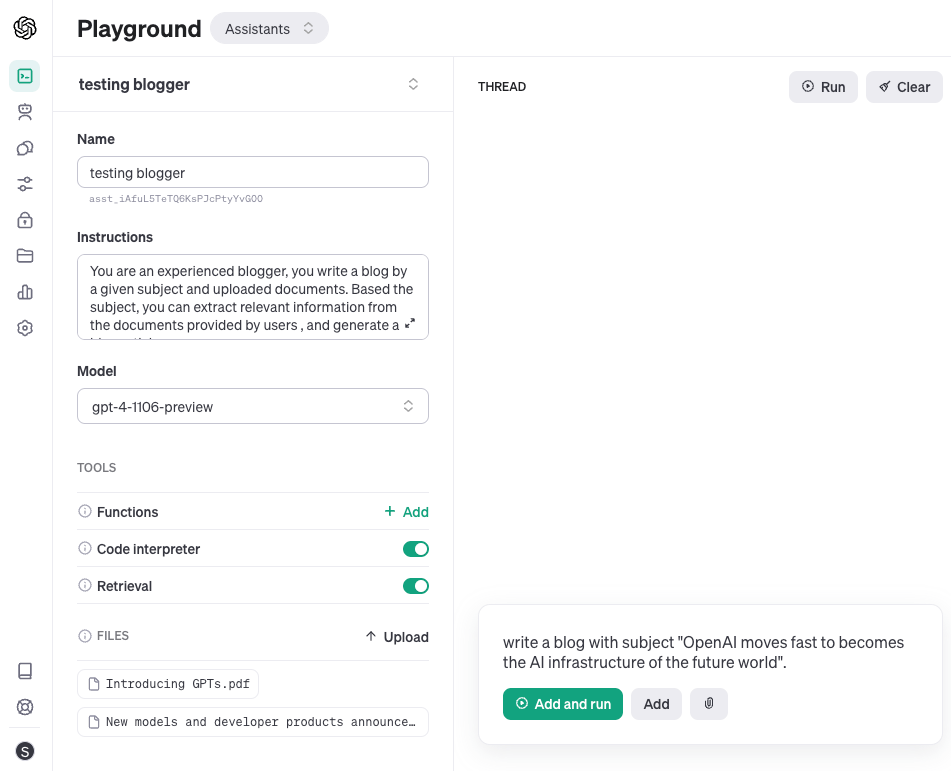

The middle section of the playground is where users chat with the assistant and get results. I gave the following instruction in the input box at the bottom:

write a blog with subject "OpenAI moves fast to becomes the AI infrastructure of the future world".

And I clicked the green “Add and run” button.

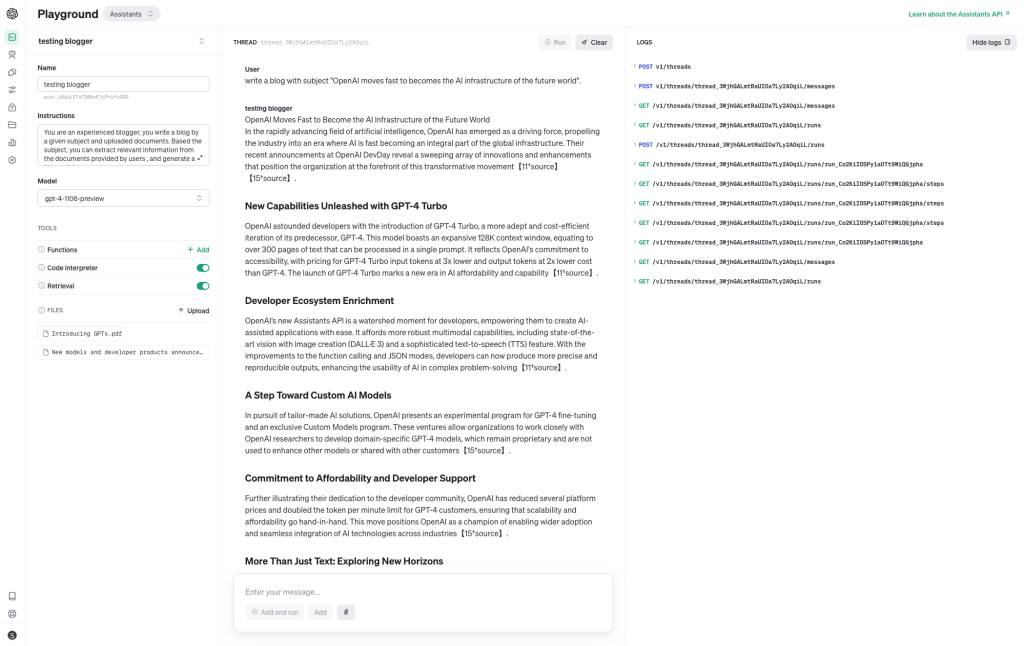

I first saw some logs pop up in the log section on the right; then, after about 10 seconds, an article was generated!

I read the article, and I can tell that it does use content from the two files I uploaded and that what it wrote is consistent with the subject. The article is not impressive, but it is okay for a quick read.

Finally, I asked the assistant to write a shorter summary, and it did so nicely. Below is its output:
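My guess is that “Add and run” corresponds roughly to three API steps: create a thread, add the user message, and start a run, then poll until the run finishes. The sketch below shows that flow with the `openai` Python SDK under the beta Assistants API as I understand it; the function and constant names are my own, and the exact response shape (`content[0].text.value`, newest message first) is an assumption worth checking against the current reference.

```python
import time

# Statuses at which polling should stop. "requires_action" is included so the
# loop cannot spin forever if the run is waiting on a tool output.
STOP_STATUSES = {"completed", "failed", "cancelled", "expired", "requires_action"}


def build_user_message(subject):
    """Build the user message in the same wording used in the playground."""
    return {"role": "user", "content": f'write a blog with subject "{subject}".'}


def run_and_wait(client, assistant_id, subject, poll_seconds=1.0):
    """Create a thread, add the request, run the assistant, and return its reply."""
    thread = client.beta.threads.create()
    client.beta.threads.messages.create(
        thread_id=thread.id, **build_user_message(subject)
    )
    run = client.beta.threads.runs.create(
        thread_id=thread.id, assistant_id=assistant_id
    )

    # Runs are asynchronous, so poll until the run stops.
    while run.status not in STOP_STATUSES:
        time.sleep(poll_seconds)
        run = client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)

    if run.status != "completed":
        raise RuntimeError(f"Run ended with status {run.status!r}")

    # Assumption: messages are listed newest first, so the reply is at index 0.
    messages = client.beta.threads.messages.list(thread_id=thread.id)
    return messages.data[0].content[0].text.value


# Usage (assumes `pip install openai`, an OPENAI_API_KEY environment variable,
# and the ID of an assistant that already exists):
#   from openai import OpenAI
#   article = run_and_wait(
#       OpenAI(),
#       "asst_...",
#       "OpenAI moves fast to becomes the AI infrastructure of the future world",
#   )
#   print(article)
```

The polling loop accounts for the short wait in the playground: the run is processed in the background, and the reply only appears in the thread once the run completes.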

OpenAI Demonstrates AI Leadership with GPT-4 Turbo and Developer-Focused Innovations

OpenAI, the AI research and deployment company, is rapidly advancing to become the infrastructure of the future world with its latest suite of innovations. At OpenAI DevDay, the company announced GPT-4 Turbo, a game-changing model boasting more capabilities and significantly lower costs, designed to handle over 300 pages of context in a single prompt.

Eager to empower developers, OpenAI launched the Assistants API, enabling the creation of sophisticated AI applications with ease, alongside improvements in multimodal capabilities such as image creation with DALL·E 3 and a new text-to-speech feature.

To offer tailored AI solutions, OpenAI introduced a Custom Models program for organizations to co-develop specialized models, ensuring those remain exclusive and secure for proprietary use.

To support scalability, pricing across the platform has been reduced, and the token per minute limit for GPT-4 customers has been doubled. With upgrades also in automatic speech recognition and image decoding, OpenAI showcases a strong commitment to innovation, cost-efficiency, and developer support as it forges a path toward AI ubiquity and accessibility.

This is an awesome tool by OpenAI. I’d say it is more like a general application-building framework. It will make building AI applications much easier for developers.

But at the same time, AI start-ups will have a tougher time finding a way to survive. I guess many start-ups’ business models assume that OpenAI’s models provide only general functions and have only publicly available knowledge, and that the start-ups add value by integrating OpenAI’s models with other systems or with private knowledge. But OpenAI’s recent moves clearly show that this assumption no longer holds. Its tools allow developers to connect to other systems, and its retrieval tool allows apps to be built on a user’s private knowledge. I see OpenAI becoming more and more like an application framework; the only major thing missing is probably a kind of workflow design suitable for working with agents, and they have probably already started working on it. My observation is that OpenAI is encouraging (or forcing) developers to focus more on the business logic and leave the infrastructure (here meaning both cloud computing and the AI application framework) to OpenAI.
