Behavior-based endpoint security is a critical component of implementing Zero Trust. However, alerts from endpoints are usually numerous and noisy, given the complexity of the OS, the diverse types of applications running on the endpoints, and the inherently dynamic nature of user behavior. Most of the time, it is extremely difficult to understand what is really going on from a single endpoint alert and to accurately assess its risk level without a human reviewing it in a broader context, by looking into the event history or correlating it with other data sources.

Such human review is impractical, though, if we want to run behavior-based endpoint security on every employee device and every server, 24×7. The time and cost of each human review make it unworkable in real-life operations.

What if we could leverage the comprehension abilities of generative AI? What if we could use it as a cheap, tireless “agent” that performs the initial review of each alert as soon as it fires and assesses its risk level? It may not be able to do all the needed work, but it could at least help us filter out the noise and perform initial triage with the desired latency and at an acceptable cost.

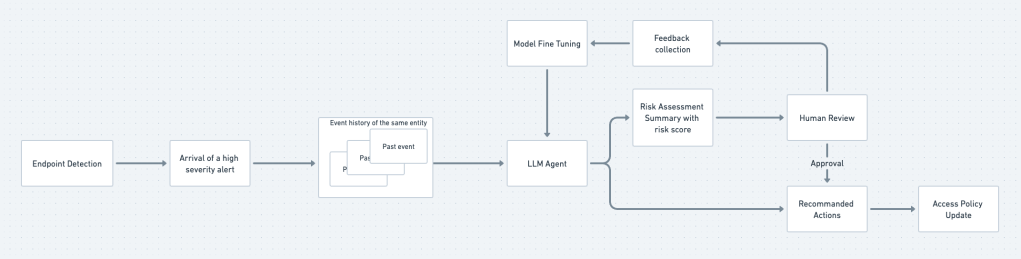

The idea is that whenever we receive a high-severity alert about an endpoint, we retrieve the event history of that endpoint and preprocess it to keep only the most relevant information. We then send this event history to the LLM for risk assessment and action recommendations. Human review is still necessary at the beginning, and for new types of cases, to confirm the model’s output or to record the analysts’ opinions when they disagree. The results of human review are collected, organized, and used to fine-tune the model for better performance. Eventually, the model can automatically filter out most of the noise and prompt for human review only for cases it rates as high risk with high confidence. The chart above illustrates the basic workflow.
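The workflow described above can be sketched in a few lines of Python. This is only a minimal illustration, not a reference implementation: the `Event` and `Assessment` types, the one-hour history window, the JSON reply format, the 0.8 confidence threshold, and the routing rule are all assumptions made for the example, and `llm` stands for any callable that takes a prompt string and returns the model's reply.

```python
import json
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Event:
    timestamp: float   # seconds since epoch
    endpoint_id: str
    severity: str      # "low", "medium", or "high"
    description: str


@dataclass
class Assessment:
    risk: str          # "low", "medium", or "high"
    confidence: float  # 0.0 to 1.0, as reported by the model
    recommendation: str


def select_history(events: List[Event], alert: Event,
                   window_seconds: float = 3600.0,
                   max_events: int = 50) -> List[Event]:
    """Keep recent events from the alerting endpoint, oldest first."""
    relevant = [
        e for e in events
        if e.endpoint_id == alert.endpoint_id
        and alert.timestamp - window_seconds <= e.timestamp <= alert.timestamp
    ]
    relevant.sort(key=lambda e: e.timestamp)
    return relevant[-max_events:]


def build_prompt(history: List[Event]) -> str:
    """Render the event history as a compact prompt (hypothetical format)."""
    lines = [f"{e.timestamp:.0f} [{e.severity}] {e.description}" for e in history]
    return (
        "Assess the risk of the following endpoint event history. "
        "Reply as JSON with keys: risk, confidence, recommendation.\n"
        + "\n".join(lines)
    )


def triage(alert: Event, events: List[Event], llm: Callable[[str], str],
           confidence_threshold: float = 0.8) -> Tuple[Assessment, str]:
    """Ask the model for an assessment and decide where the alert goes."""
    history = select_history(events, alert)
    reply = json.loads(llm(build_prompt(history)))
    assessment = Assessment(
        risk=reply["risk"],
        confidence=float(reply["confidence"]),
        recommendation=reply["recommendation"],
    )
    # Assumed routing rule: a human looks at anything the model rates as
    # high risk or is unsure about; everything else is closed as noise.
    if assessment.risk == "high" or assessment.confidence < confidence_threshold:
        route = "human_review"
    else:
        route = "auto_close"
    return assessment, route
```

In the early phase, when every case still needs human confirmation, the threshold could simply be set above 1.0 so that everything is routed to an analyst, and the analyst's verdict stored alongside the model's output for later fine-tuning.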

The value of this idea is that it frees human resources from reviewing alerts and makes it possible to integrate noisy data sources into large-scale, real-time cybersecurity operations.

One limitation is that the LLM will still produce false negatives: there is a chance that it fails to report genuinely risky behavior. Retraining the model with fresh security incident reports would help reduce such errors.
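To know whether retraining actually helps, the false-negative rate has to be measured. One possible way, sketched below, is to compare the model's verdicts with analyst verdicts on a set of reviewed cases. The function name and the input format are assumptions for illustration, and the approach only works if some of the alerts the model dismissed are also sampled for human review, since otherwise missed cases are never observed.

```python
from typing import Iterable, Tuple


def false_negative_rate(reviews: Iterable[Tuple[bool, bool]]) -> float:
    """Fraction of analyst-confirmed risky cases that the model missed.

    Each review is (model_flagged_risky, analyst_confirmed_risky).
    Returns 0.0 when no case was confirmed risky.
    """
    # Keep only the cases an analyst confirmed as risky.
    flags_on_risky = [flagged for flagged, confirmed in reviews if confirmed]
    if not flags_on_risky:
        return 0.0
    missed = sum(1 for flagged in flags_on_risky if not flagged)
    return missed / len(flags_on_risky)
```

Tracking this number before and after each retraining round gives a rough indication of whether fresh incident reports are reducing missed detections.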

I would love to hear what you think about this idea.
